Using the Synapse-Twitter Power-Up to Ingest IOCs Shared via Twitter

by savage | 2023-04-03

While many organizations primarily share data through subscription feeds, blog posts, and white papers, a number of organizations and independent researchers also use Twitter to share indicators of compromise (IOCs) from recently identified activity. In some cases, these tweets can serve as a kind of "early warning system" or preview of the authoring researcher or organization’s work, alerting others and allowing them to begin tracking the new activity long before a blog (if one is even in production) emerges. But how is an analyst supposed to efficiently capture these indicators in Synapse? Fortunately, we can use the Synapse-Twitter Power-Up to scrape and model indicators shared in tweets, and even capture the authoring account and post. In this blog, we’ll walk through how to use the Synapse-Twitter Power-Up to model Twitter accounts, ingest posts, and scrape out and link tweeted indicators.

Modeling a Twitter Account and Ingesting Tweets

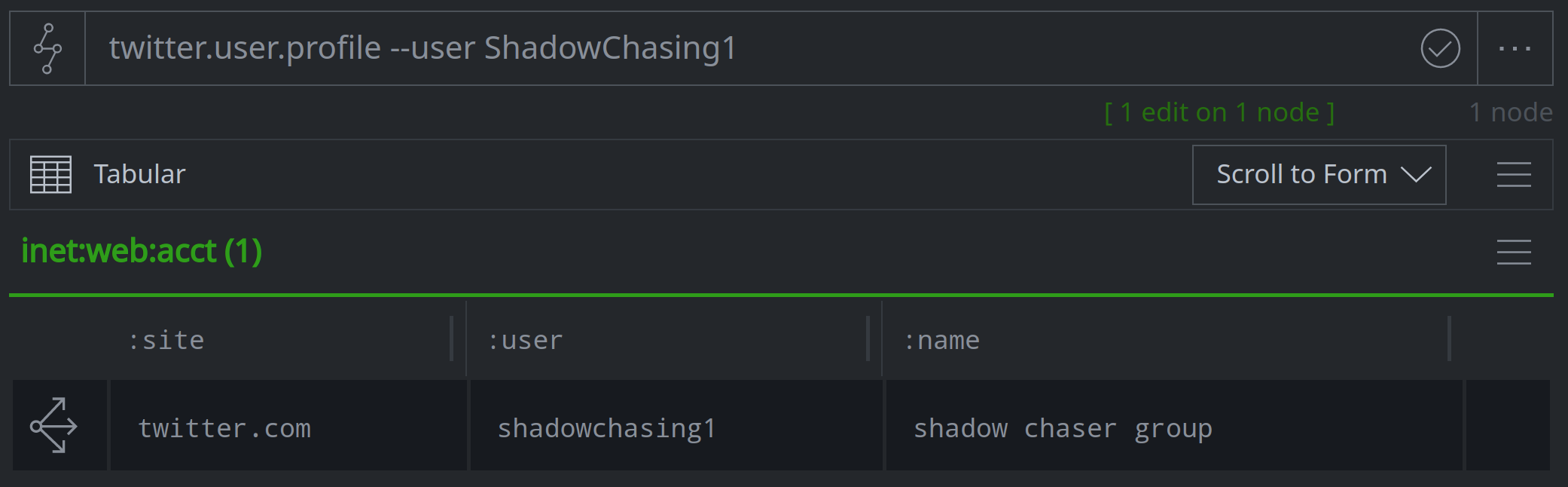

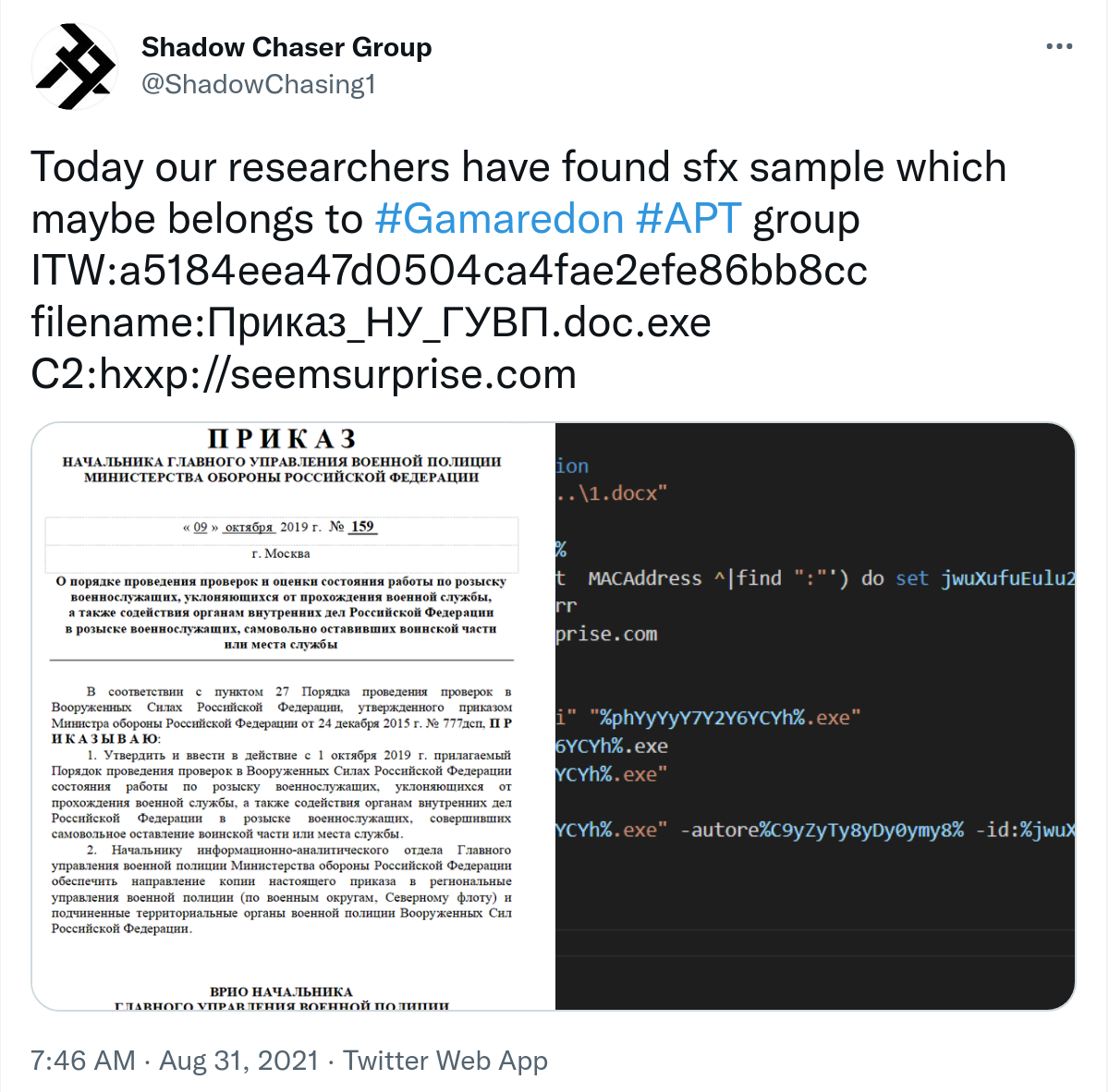

One Twitter account that frequently shares indicators is run by individuals who call themselves the Shadow Chaser Group and share an interest in advanced persistent threat (APT) activity, per their Twitter profile. We’ll use this account as a case study for this blog given that it regularly tweets file hashes, filenames, and URLs related to malicious activity.

Before importing any tweets, we’ll want to model the Twitter account itself so that we can keep track of where we got the scraped indicators. We can do this using the Synapse-Twitter Power-Up by specifying the account name, as shown here:

Now we’re ready to start pulling down and modeling tweets. There are two ways we can do this:

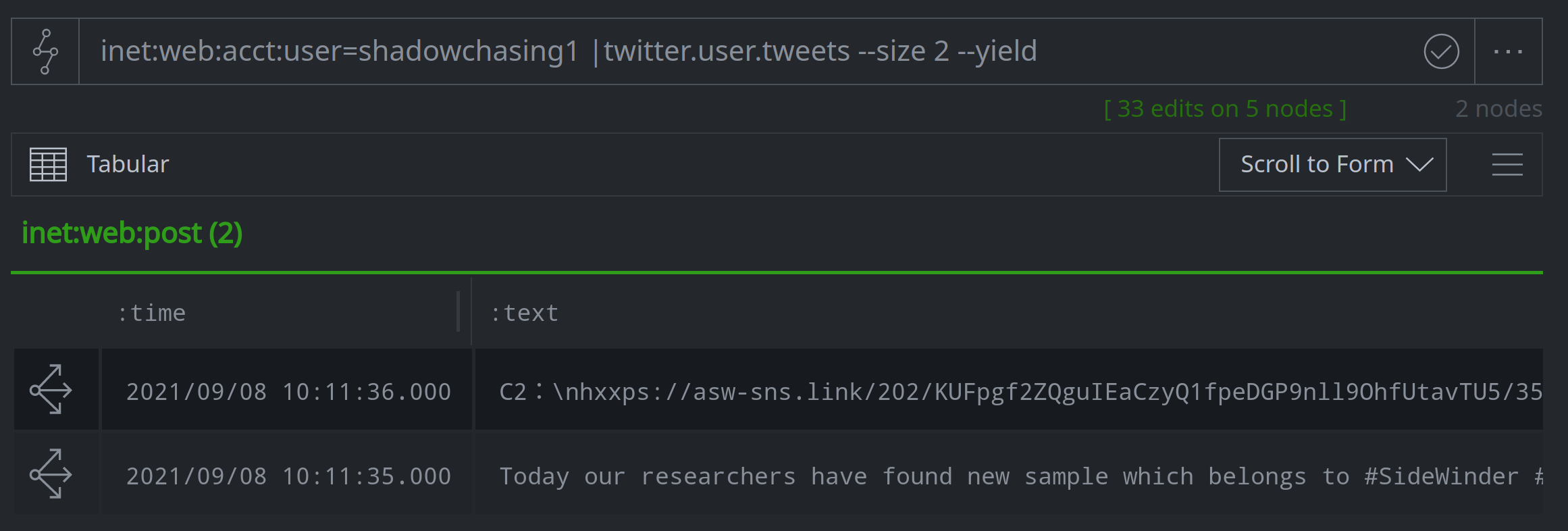

Option 1: Pipe Accounts to twitter.user.tweets

If we want to ingest tweets on a one-time basis from one or more accounts, we can do so by piping the accounts to twitter.user.tweets and specifying how many of the most recent tweets we’d like to import. In the example below, we're using the Synapse-Twitter Power-Up to ingest, model, and display the two most recent tweets from ShadowChasing1:

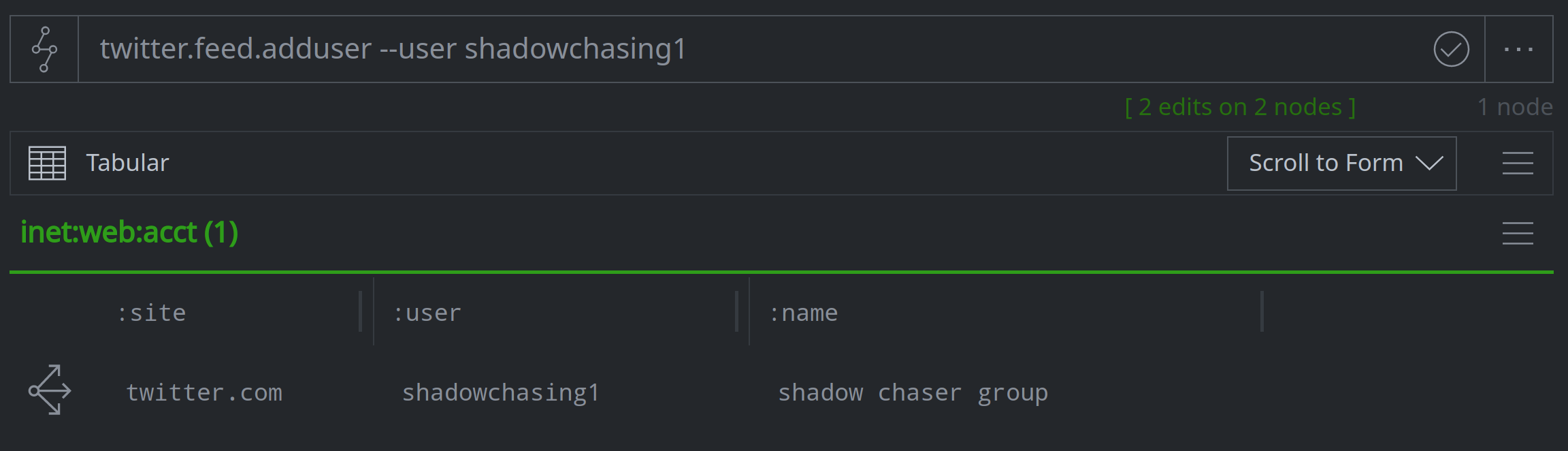

Option 2: Create a Twitter Feed to Regularly Ingest Posts from Multiple Accounts

However, if we want to ingest tweets from multiple Twitter accounts on an ongoing basis, then we might consider creating our own Twitter feed. The twitter.feed.adduser and twitter.feed.deluser commands will allow us to curate a list of Twitter accounts. In the image below, we're adding ShadowChasing1 to our Twitter feed:

We can add other accounts and check the contents of the feed by running twitter.feed.list in the Console Tool:

> twitter.feed.list

User: shadowchasing1 Last tweet id: 1

User: h2jazi Last tweet id: 1

User: malwrhunterteam Last tweet id: 1

User: thedfirreport Last tweet id: 1

User: blackorbird Last tweet id: 1

User: ESETresearch Last tweet id: 1

User: RedDrip7 Last tweet id: 1

User: BlackLotusLabs Last tweet id: 1

User: MsftSecIntel Last tweet id: 1

User: 360CoreSec Last tweet id: 1
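If the feed grows long, it can be handy to check the listing against the accounts we intended to follow. The following is a minimal Python sketch that assumes the console output has been copied out as plain text in the "User: ... Last tweet id: ..." shape shown above. The function names are hypothetical helpers and are not part of the Power-Up.

```python
import re

# Matches one line of the pasted listing, e.g.
# "User: shadowchasing1 Last tweet id: 1"
FEED_LINE = re.compile(r"^User:\s+(?P<user>\S+)\s+Last tweet id:\s+(?P<last_id>\d+)$")


def parse_feed_list(text):
    """Return (user, last_tweet_id) tuples found in pasted console output."""
    entries = []
    for line in text.splitlines():
        match = FEED_LINE.match(line.strip())
        if match:
            entries.append((match.group("user"), int(match.group("last_id"))))
    return entries


def missing_accounts(entries, wanted):
    """Return the wanted account names (case-insensitive) absent from the feed."""
    present = {user.lower() for user, _ in entries}
    return [name for name in wanted if name.lower() not in present]
```

Lines that do not match the pattern, such as the prompt line or blank lines, are simply skipped.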

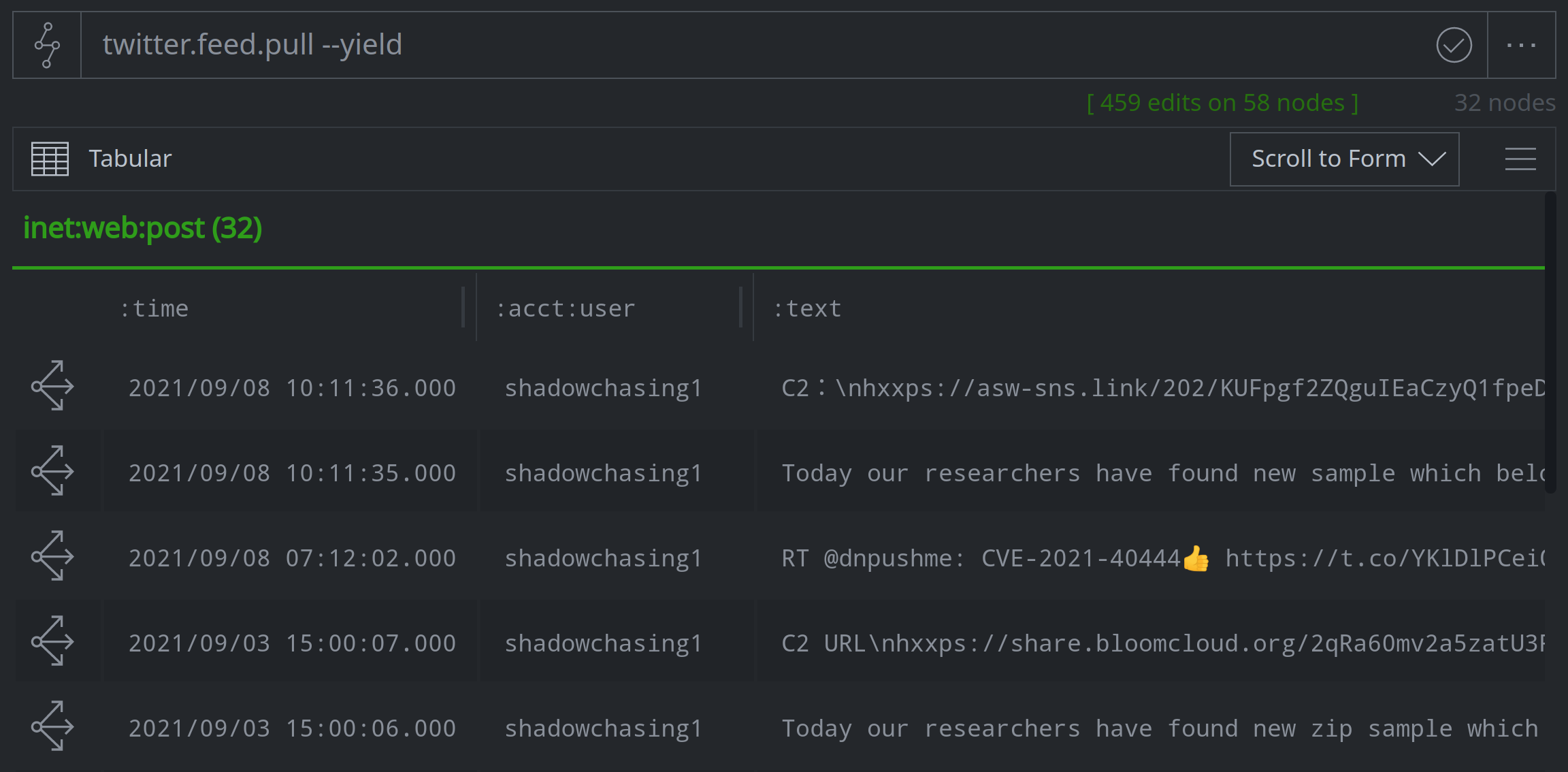

When our list is ready, we can use the twitter.feed.pull command to import tweets from the accounts in our feed:

This command will import any new tweets that have not already been ingested into Synapse, meaning that we might import a lot of tweets the first time we run it (or if we don’t run the command very frequently). We might choose to set up a cron job to regularly pull in tweets from the feed so that we don’t have to worry about remembering to do so.
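The "only new tweets" behavior is easiest to picture as a per-account cursor, which is what the "Last tweet id" value in the feed listing suggests. The sketch below illustrates that idea in Python. It is not the Power-Up's implementation, and it assumes each tweet is a dict with a numeric `id` that increases over time.

```python
def select_new_tweets(tweets, last_seen_id):
    """Return tweets newer than last_seen_id (oldest first) and the new cursor.

    `tweets` is assumed to be an iterable of dicts with an integer "id" key;
    this shape is hypothetical and chosen only for illustration.
    """
    new = sorted((t for t in tweets if t["id"] > last_seen_id), key=lambda t: t["id"])
    cursor = new[-1]["id"] if new else last_seen_id
    return new, cursor
```

With a cursor like this, a first run (or a run after a long gap) returns everything newer than the stored ID, which is why the initial pull can be large, while frequent runs return only a handful of tweets each time.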

Scraping Indicators & Linking to Tweets

In addition to pulling in and modeling tweets, the Synapse-Twitter Power-Up will also scrape out any indicators it identifies in the tweet text and link them back to the inet:web:post node representing the tweet using a -(refs)> light edge. Let’s say, for example, that we used the Synapse-Twitter Power-Up to pull in and model this ShadowChasing1 tweet, which references a file hash, domain, and URL:

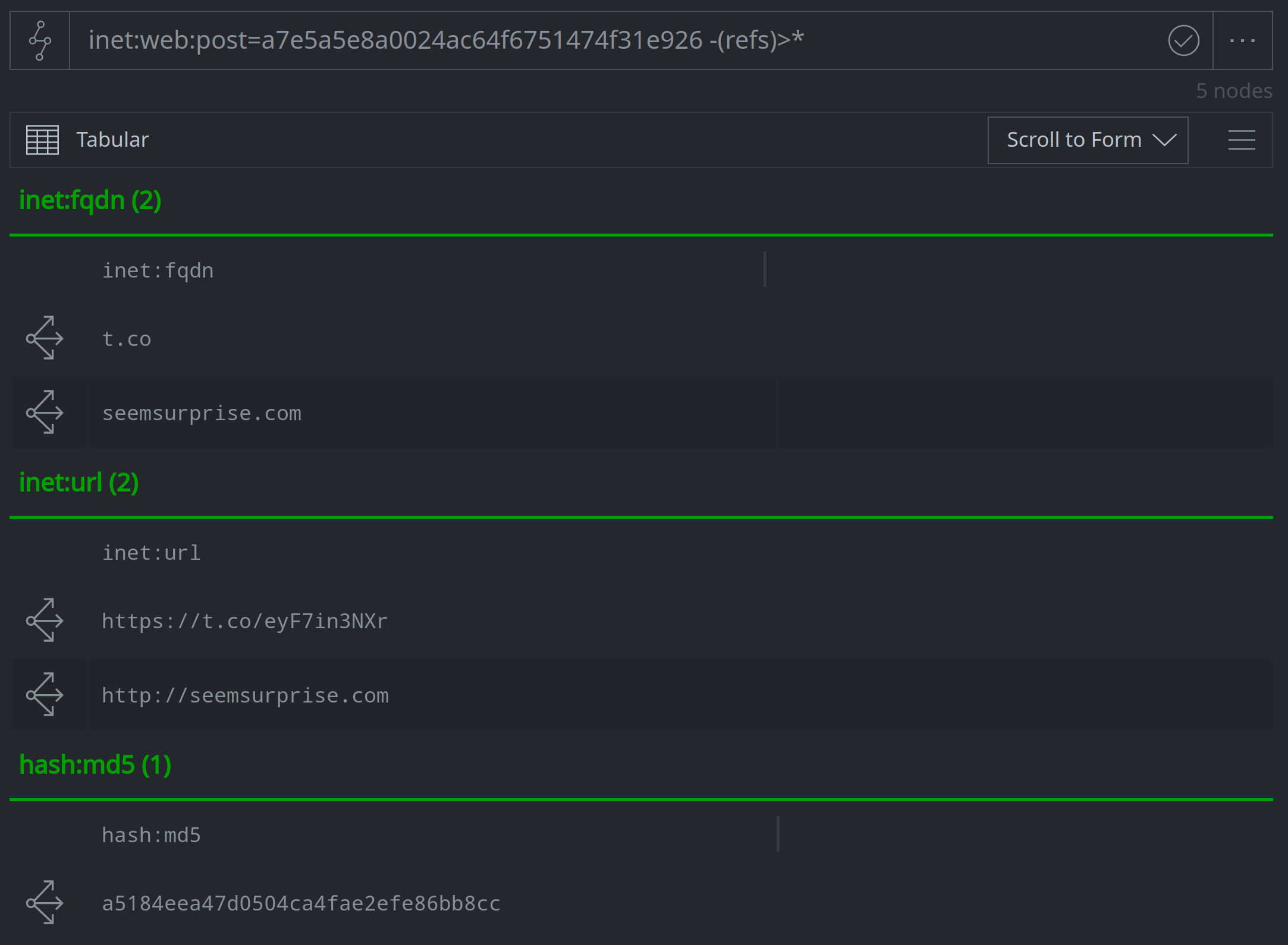
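To make the scraping step more concrete, here is a rough Python sketch of pulling hashes, URLs, and URL hostnames out of tweet text. It is only an approximation for illustration: Synapse's own scraping recognizes more indicator types, and the regular expressions, the `refang` helper, and the returned dict shape are all assumptions of this sketch.

```python
import re
from urllib.parse import urlsplit

# MD5 (32), SHA1 (40), or SHA256 (64) hex strings.
HASH_RE = re.compile(r"\b(?:[a-fA-F0-9]{64}|[a-fA-F0-9]{40}|[a-fA-F0-9]{32})\b")
URL_RE = re.compile(r"https?://[^\s<>\"']+", re.IGNORECASE)


def refang(text):
    """Undo a few common defanging conventions seen in shared indicators."""
    return text.replace("hxxp", "http").replace("[.]", ".").replace("[:]", ":")


def scrape_indicators(tweet_text):
    """Return hashes, URLs, and the hostnames of those URLs found in the text."""
    text = refang(tweet_text)
    urls = URL_RE.findall(text)
    hosts = {urlsplit(url).hostname for url in urls}
    return {
        "hashes": [h.lower() for h in HASH_RE.findall(text)],
        "urls": urls,
        "domains": sorted(h for h in hosts if h),
    }
```

Note that this sketch only derives domains from URLs. Matching bare domains in free text is more error-prone, since filenames such as `invoice.doc` look much like hostnames.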

We can pivot from the inet:web:post that captures the tweet to view the nodes representing those scraped indicators:

Note that the scraped indicators include a URL and domain belonging to Twitter’s URL shortening service, t.co. Since these are not what we’re interested in capturing, we can either delete the light edge connecting them to the post or apply a tag to identify them and make it easier to filter them out from query results.
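The filtering logic itself is simple. As a sketch, assuming each scraped indicator is represented as a `(form, value)` pair such as `("inet:url", "https://t.co/abc123")`, a Python version might look like the following. The tuple representation and helper names are hypothetical and are used only to show the rule being applied.

```python
from urllib.parse import urlsplit

# Hosts we treat as link-shortener noise rather than indicators of interest.
SHORTENER_HOSTS = {"t.co"}


def is_shortener(indicator):
    """Return True if a (form, value) pair is a shortener domain or URL."""
    form, value = indicator
    if form == "inet:fqdn":
        return value.lower() in SHORTENER_HOSTS
    if form == "inet:url":
        # urlsplit().hostname is already lowercased, or None if absent.
        host = urlsplit(value).hostname
        return host is not None and host in SHORTENER_HOSTS
    return False


def drop_shorteners(indicators):
    """Keep every indicator that is not shortener noise, preserving order."""
    return [ind for ind in indicators if not is_shortener(ind)]
```

Comparing the parsed hostname, rather than checking whether the string contains "t.co", avoids accidentally discarding an unrelated domain such as `sport.com`.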

As for the remaining nodes, we’re probably going to want to enrich them so we can continue with our research. We can enrich manually using the Storm Query Bar or Node Actions, or we can implement automation that can run the enrichment for us.

Automation Options

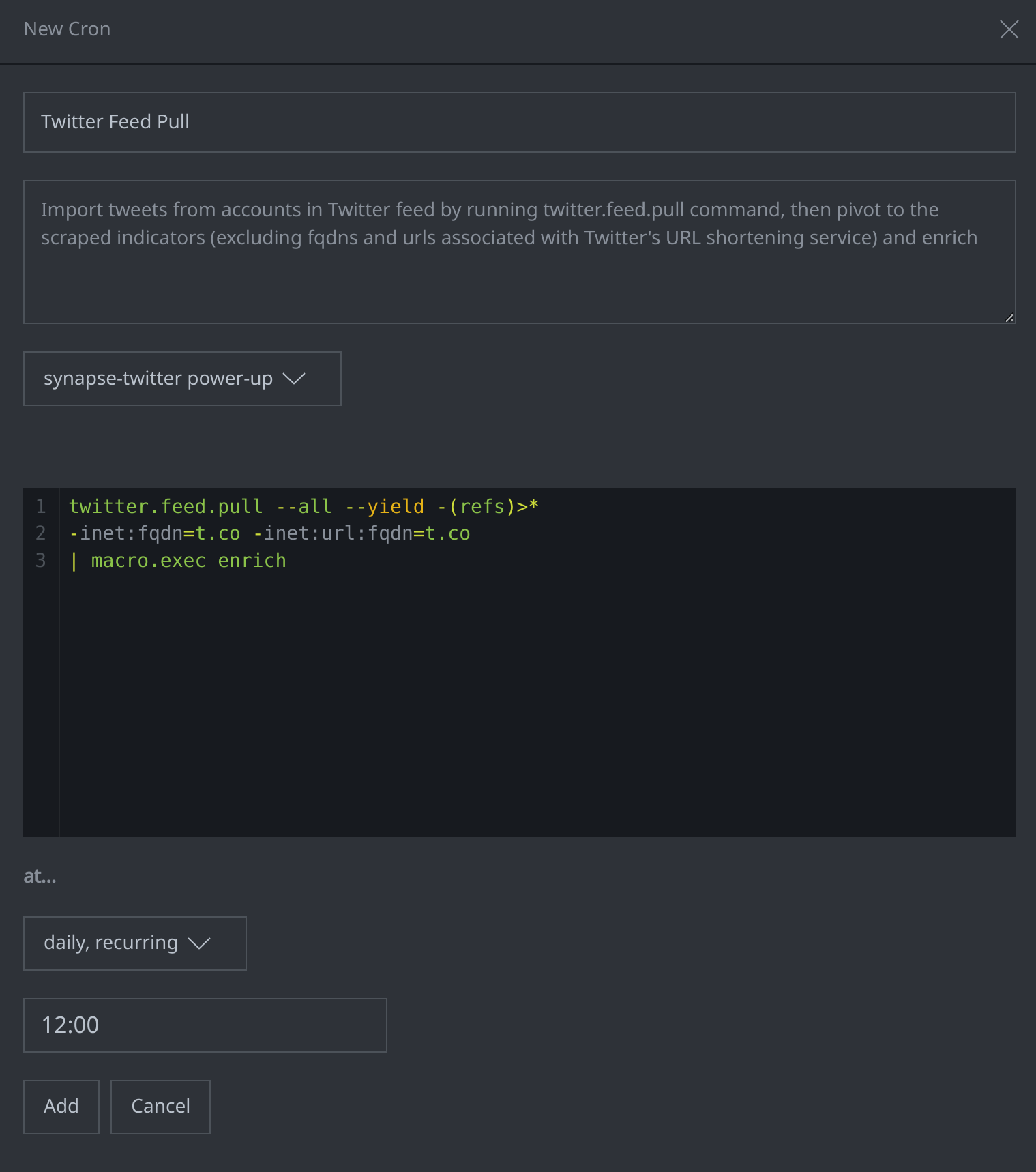

What are some ways that we could automate this process? One option would be to create a cron job that regularly runs the twitter.feed.pull command to ingest tweets from the accounts in our Twitter feed. The following cron job, for example, would run the twitter.feed.pull command daily, then walk the -(refs)> light edge to the scraped indicators, filtering out any domains or URLs that are from Twitter’s shortening service. The cron job will then pipe the remaining nodes to an enrichment macro named enrich, which will pull in additional data and context from multiple other Synapse Power-Ups.
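The control flow of such a job (pull, walk to referenced indicators, filter, enrich) can be outlined in a few lines of Python. This is only a sketch of the sequence of steps, not Storm and not the actual cron job: the four callables are hypothetical stand-ins for the feed pull, the -(refs)> walk, the shortener filter, and the enrich macro.

```python
def run_feed_job(pull_feed, refs_of, is_noise, enrich):
    """Pull new posts, follow each post's referenced indicators, enrich the keepers.

    pull_feed() -> iterable of posts
    refs_of(post) -> iterable of indicators referenced by that post
    is_noise(indicator) -> True for indicators to skip (e.g. t.co links)
    enrich(indicator) -> performs enrichment; return value is ignored
    """
    enriched = []
    for post in pull_feed():
        for indicator in refs_of(post):
            if is_noise(indicator):
                continue
            enrich(indicator)
            enriched.append((post, indicator))
    return enriched
```

Returning the `(post, indicator)` pairs keeps the link between each enriched indicator and the tweet it came from, mirroring the role the light edge plays in Synapse.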

This cron job is one example of how we can use automation to streamline our workflow by importing and scraping tweets, and then enriching the scraped indicators so that we can begin researching with enriched data. Keep in mind that we can design our automation to do other tasks as well, such as generating Jira tickets or performing analytic tasks like running files against YARA rules and applying tags. One of our previous blogs outlines several examples of ways to automate Synapse Power-Ups.

Using the Synapse-Twitter Power-Up to Capture Tweeted Indicators

The ability to regularly import, model, and enrich data from Twitter is particularly useful given the number of organizations and researchers that use Twitter to share IOCs identified during the course of their work. With the Synapse-Twitter Power-Up, analysts can directly download relevant tweets and scrape out and model indicators. Analysts can also take advantage of Synapse’s support for automation to craft cron jobs and triggers to pull in related data, perform limited analysis, create and assign tickets to track tasking, and perform additional operations as needed.

For more information on Synapse, Power-Ups, and use cases, join our community Slack channel, follow us on Twitter, and check out our videos on YouTube.